Data Quality: BigDaPTOOLS

Big Data Preprocessing Software: Models and Tools to improve the quality of data

We live in a world where data is generated from a multitude of sources, and it is really cheap to collect and store that data. However, the real benefit is not related to the data itself, but to the algorithms that are able to process the data in a tolerable time, and extract valuable knowledge from it. Therefore, the use of Big Data Analytics tools provides very significant advantages for both industry and academia.

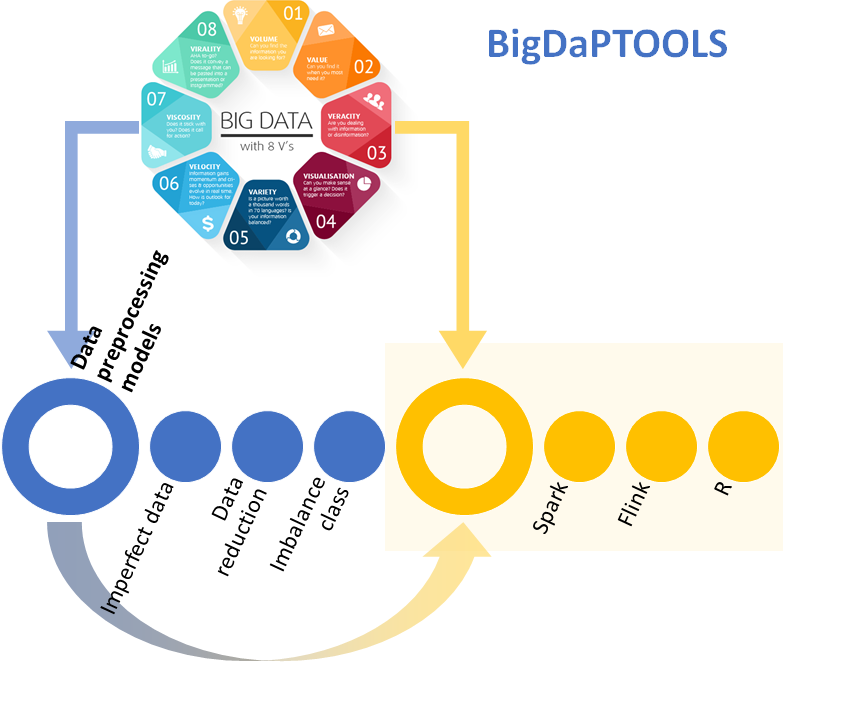

Data preprocessing techniques are dedicated to correcting or mitigating errors in the data. The reduction of dimensionality, instances, noise filtering and discretization are examples of the most widespread data preprocessing techniques. Although we can find many theoretical proposals for data preprocessing and Big Data, there is little software development dedicated to the free availability of algorithms in Big Data environments.

BigDapTOOLS is a package of tools born with the objective of providing and unifying software developments related to data preprocessing and Big Data. This project began with funding from the BBVA Foundation. To date, we have several developments carried out on three well-known Data Science platforms, although the package will continue to grow in the coming years. The most noteworthy developments are:

- Software in R. These algorithms address problems such as data reduction with autoencoders, data preprocessing for imbalanced data sets, ordinal and noisy data, as well as a general purpose library for data preprocessing called ‘smartdata’, which collects the state of the art algorithms for data preprocessing in R, being a container of algorithms that provides a uniform interface to other packages. (https://sci2s.ugr.es/BigDaPR)

- Software in Spark. Apache Spark is an open source engine developed specifically to handle large-scale data processing and analysis. The developed software is available in Spark Packages and contains a set of data preprocessing algorithms for feature selection, discretization, noise filtering and imputation of missing values. (https://sci2s.ugr.es/BigDaPSpark)

Software on Flink. Apache Flink is a recent and novel Big Data framework which used the MapReduce paradigm, focused on distributed processing of data in flow and in batches. This library contains six of the most popular data preprocessing algorithms for data streams, three for discretization and the rest for feature selection. The associated work is in: (https://arxiv.org/abs/1810.06021)

For more information visit this tematic website https://sci2s.ugr.es/BigDaPTOOLS

Period

October 2016 – Present

Researchers

Francisco Charte, Alberto Fernández, Francisco Herrera, Salvador García, Julián Luengo, Sergio Ramírez, Diego Jesús García, Ignacio Cordón.